How Web Scraping Can Support Macroeconomics and Be Used for Greater Good

Researchers have examined web scraping closely, and its praises have long been sung for business applications. We have played no small part in that ourselves.

The world of business, however, isn’t just about turnover, profit, and the hustle. There’s some common good to be done as well. Yet several factors may explain the hesitancy to take the plunge and deploy web scraping for the common good.

Primarily, the hesitancy toward web scraping stems, at least in part, from the industry’s “wild west” legal situation. Regulation and legislation have been a little sluggish to catch up, as our legal counsel would note.

Slow adoption doesn’t mean it won’t happen eventually. And it will happen, because there’s plenty of utility for web scraping in the public sector.

With some care, we’ll delve into a topic much debated by professionals and laypeople alike: how web scraping can support macroeconomics and be used for the greater good.

Macroeconomics and Modeling

Web scraping refers to the various methods used to automatically collect information from across the internet and extract structured data from websites. It can be used to collect data about businesses, prices, and numerous other factors.
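
To make the definition concrete, here is a minimal sketch of the extraction step using only Python’s standard library. It assumes a hypothetical product page where each price sits in an element with the class `price` and contains no nested tags; real pages vary widely, and this is not how any particular scraper works.

```python
from html.parser import HTMLParser


class PriceParser(HTMLParser):
    """Collects text from elements whose class list contains target_class.

    Assumes (hypothetically) that price elements hold plain text only,
    with no nested tags.
    """

    def __init__(self, target_class: str = "price") -> None:
        super().__init__()
        self.target_class = target_class
        self._capturing = False
        self.raw_prices: list[str] = []

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if self.target_class in classes:
            self._capturing = True

    def handle_endtag(self, tag):
        # Any closing tag ends the capture (fine for flat price elements).
        self._capturing = False

    def handle_data(self, data):
        if self._capturing and data.strip():
            self.raw_prices.append(data.strip())


def extract_prices(html: str, target_class: str = "price") -> list[float]:
    parser = PriceParser(target_class)
    parser.feed(html)
    parser.close()
    # Drop the dollar sign and thousands separators before converting.
    return [float(p.replace("$", "").replace(",", "")) for p in parser.raw_prices]


page = (
    '<ul><li><span class="name">Milk</span><span class="price">$2.49</span></li>'
    '<li><span class="name">Laptop</span><span class="price">$1,299.00</span></li></ul>'
)
print(extract_prices(page))  # [2.49, 1299.0]
```

In practice, the class name, currency format, and page layout would all have to be adapted to each store.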

Macroeconomics, on the other hand, is a branch of economics concerned with large-scale or general economic factors, such as interest rates and national productivity. It relies heavily on mathematical modeling of aggregate changes in the economy, such as unemployment, inflation, growth rate, and gross domestic product (GDP).

Macroeconomics is often criticized for attempting to draw real-world conclusions from these models, in other words, for applying conclusions from exceedingly simple systems to complex ones. Yet, with the help of web scraping, macroeconomics can be used for good.

To a lot of people, macroeconomic approaches feel a little suspect. The discipline relies heavily on mathematical modeling, which to some may look like a fancy word for “oversimplification.” Basic supply and demand models are the best-known example.

Markets, the argument goes, are significantly more complex and slower to adjust than these models might imply. Supply doesn’t match demand instantaneously; there’s a certain delay and pass-through rate.

An esteemed example, however, is the Consumer Price Index (CPI). The Bureau of Labor Statistics (BLS) started tracking CPI back in 1918 as a way to measure inflation. In simplified terms, CPI tracks changes in the price of a representative, expenditure-weighted basket of goods and services across the country.
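
As a rough illustration of the fixed-basket idea, the sketch below computes a Laspeyres-style index: the cost of a base-period basket at current prices, divided by its cost at base-period prices, times 100. The items, prices, and quantities are made up, and the actual CPI methodology is far more involved.

```python
def laspeyres_index(
    base_prices: dict[str, float],
    current_prices: dict[str, float],
    quantities: dict[str, float],
) -> float:
    """Fixed-basket (Laspeyres-style) price index with the base period = 100.

    quantities holds the base-period basket: how much of each item is bought.
    """
    base_cost = sum(base_prices[item] * q for item, q in quantities.items())
    current_cost = sum(current_prices[item] * q for item, q in quantities.items())
    return 100.0 * current_cost / base_cost


# Hypothetical two-item basket.
basket = {"bread": 10, "milk": 20}
print(laspeyres_index({"bread": 2.00, "milk": 1.00}, {"bread": 2.10, "milk": 1.20}, basket))
# 112.5
```

Because the quantities stay fixed, the index only reflects price changes, which is exactly why the choice and weighting of the basket matter so much.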

I should note that I’m not doing the complexity of CPI calculations justice. They account for changing shopping and behavior patterns and use numerous other mathematical instruments that inch the model closer to reality.

Even so, CPI is probably one of the most widely criticized metrics. Some critics question the choice of the basket of goods, such as leaving more volatile products out of “core” measures. Others criticize the weighting and substitution practices used in calculations since the 1990s. Finally, some countries take completely different approaches to CPI.
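
To give one concrete flavor of the substitution debate: at the lowest level of aggregation, individual price changes have to be averaged somehow. The sketch below contrasts an arithmetic mean of price relatives (the Carli formula) with a geometric mean (the Jevons formula), which implicitly allows for some substitution toward items that became relatively cheaper. The BLS uses a geometric mean for most of its lower-level CPI calculations; the two-item example is invented purely to show how far the formulas can diverge.

```python
from statistics import fmean, geometric_mean


def carli(relatives: list[float]) -> float:
    """Arithmetic mean of price relatives (current price / base price)."""
    return fmean(relatives)


def jevons(relatives: list[float]) -> float:
    """Geometric mean of price relatives."""
    return geometric_mean(relatives)


# Hypothetical: one item doubles in price, another halves.
relatives = [2.0, 0.5]
print(carli(relatives))   # 1.25, i.e. 25% "inflation"
print(jevons(relatives))  # approximately 1.0, i.e. no change
```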

In Macroeconomics: An Introduction, Alex M. Thomas notes that India uses several different metrics as signals of the price level: the Wholesale Price Index (WPI) and several variants of CPI. India calculates CPI separately for industrial workers, rural laborers, and agricultural laborers.

All of these are calculated relative to a base year. While there are criteria for choosing a base year, the choice remains somewhat susceptible to subjectivity. After all, at some point the base year has to be changed, which shifts all indices accordingly.
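
Mechanically, moving an index to a new base year can be as simple as dividing every value by the new base year’s value and multiplying by 100, which preserves the ratios between years. The sketch below shows only that rescaling step with made-up values; actual base-year revisions usually also update the basket and its weights, which is where the subjectivity comes in.

```python
def rebase(index: dict[int, float], new_base: int) -> dict[int, float]:
    """Rescale an index series so that index[new_base] == 100."""
    base_value = index[new_base]
    return {year: 100.0 * value / base_value for year, value in index.items()}


# Hypothetical index with 2012 = 100.
old = {2012: 100.0, 2016: 120.0, 2020: 150.0}
new = rebase(old, 2016)
print(new[2016], new[2020])  # 100.0 125.0
```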

In the end, every way to calculate CPI takes a slice of data from the real world and tries to make its conclusions as accurate as possible. In part, modeling is a workaround for our inability to acquire and analyze all the data.

Unfettered Access to Data

Back in the day, causal determinists famously believed that if we knew everything there was to know, there would be no randomness. Coin flips could be predicted with perfect accuracy as long as the data was available.

Causal determinism has undergone a lot of changes since then and has gone down a road a little more complicated than its initial stance. Still, it partly mirrors the logic of modeling: if we knew everything there was to know, many, if not all, mathematical models would be obsolete.

I believe CPI collects data the way it does because gathering information about all, or even a significant portion, of the goods across an entire country used to be nigh impossible. So some deliberate and calculated liberties had to be taken. That may not be as relevant anymore.

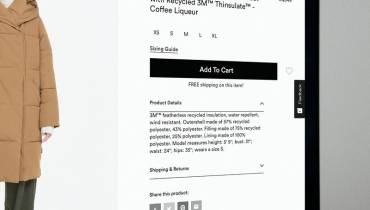

Today, web scraping can be used to acquire as much data as necessary. While physical stores might be out of reach, the sheer scale of ecommerce means that tracking prices online is no longer such an arduous task. Often, all it takes is a scraper API built for ecommerce.
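
Many ecommerce pages embed machine-readable product data as schema.org JSON-LD, which is often easier to extract reliably than visible page text. The sketch below pulls the name, price, and currency from `Product` entries with a single `Offer`. The sample page is invented, the regular expression is a shortcut rather than a proper HTML parser, and real sites may nest products under `@graph` or use `AggregateOffer`, which this sketch skips.

```python
import json
import re

# Shortcut: find <script type="application/ld+json"> blocks with a regex.
JSON_LD_PATTERN = re.compile(
    r'<script[^>]*type="application/ld\+json"[^>]*>(.*?)</script>',
    re.DOTALL | re.IGNORECASE,
)


def product_offers(html: str) -> list[dict]:
    results = []
    for block in JSON_LD_PATTERN.findall(html):
        try:
            data = json.loads(block)
        except json.JSONDecodeError:
            continue  # skip malformed blocks instead of failing the whole page
        items = data if isinstance(data, list) else [data]
        for item in items:
            if not isinstance(item, dict) or item.get("@type") != "Product":
                continue
            offer = item.get("offers", {})
            if isinstance(offer, list):
                offer = offer[0] if offer else {}  # keep only the first offer
            if isinstance(offer, dict) and "price" in offer:
                results.append({
                    "name": item.get("name"),
                    "price": float(offer["price"]),
                    "currency": offer.get("priceCurrency"),
                })
    return results


page = (
    '<html><head><script type="application/ld+json">'
    '{"@context": "https://schema.org", "@type": "Product", "name": "Coffee 500g",'
    ' "offers": {"@type": "Offer", "price": "7.99", "priceCurrency": "USD"}}'
    '</script></head></html>'
)
print(product_offers(page))
```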

Billion Prices and Other Projects

There have been successful attempts at using web scraping to estimate inflation and CPI. The Billion Prices Project (BPP) did exactly that and led to three other projects, two of which provide accurate inflation tracking in countries whose governments might be less willing to do so.

BPP is particularly interesting because it launched back in 2007, before web scraping had reached the level of development it has now. Researchers today wouldn’t have to build everything from scratch: scraping solution providers would let them focus on macroeconomic research rather than on overseeing development.

Unfortunately, the details of how they did it seem to be either lost to time or never fully disclosed. One of their articles mentions an online appendix for readers eager to learn more about the web scraping, but the document holds only surface-level information about the process.

A short summary of the process is still useful. Cavallo and his team created automated programs that ran through major online stores and collected the prices of millions of products every day. The data was then stored so that prices could be compared over time.
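
The store-and-compare step can be sketched with Python’s built-in `sqlite3` module: each day’s scraped prices are saved as a snapshot, and prices for products seen on two different days are compared. The table layout, product IDs, and dates are hypothetical; this is not a reconstruction of BPP’s actual pipeline.

```python
import sqlite3


def open_store(path: str = ":memory:") -> sqlite3.Connection:
    conn = sqlite3.connect(path)
    conn.execute(
        """CREATE TABLE IF NOT EXISTS prices (
               day TEXT NOT NULL,
               product_id TEXT NOT NULL,
               price REAL NOT NULL,
               PRIMARY KEY (day, product_id))"""
    )
    return conn


def save_snapshot(conn: sqlite3.Connection, day: str, prices: dict[str, float]) -> None:
    # Re-running a scrape for the same day overwrites that day's prices.
    conn.executemany(
        "INSERT OR REPLACE INTO prices (day, product_id, price) VALUES (?, ?, ?)",
        [(day, pid, price) for pid, price in prices.items()],
    )
    conn.commit()


def price_changes(conn: sqlite3.Connection, day_a: str, day_b: str) -> dict[str, float]:
    """Percent change from day_a to day_b for products observed on both days."""
    rows = conn.execute(
        """SELECT a.product_id, a.price, b.price
           FROM prices a JOIN prices b ON a.product_id = b.product_id
           WHERE a.day = ? AND b.day = ?""",
        (day_a, day_b),
    ).fetchall()
    return {pid: 100.0 * (pb / pa - 1.0) for pid, pa, pb in rows}


conn = open_store()
save_snapshot(conn, "2024-03-01", {"milk": 1.00, "bread": 2.00, "eggs": 3.00})
save_snapshot(conn, "2024-03-02", {"milk": 1.10, "bread": 2.00, "rice": 4.00})
# Milk is up about 10% and bread is unchanged; eggs and rice appear on only one day.
print(price_changes(conn, "2024-03-01", "2024-03-02"))
```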

Several years down the line, BPP had proven itself to be of immense practical use. Alberto Cavallo, the head of the project, has published numerous studies that show how web scraping can support macroeconomic research or even render some historical approaches obsolete.

One such example is his co-authored paper “Using Online Prices for Measuring Real Consumption Across Countries.” By scraping pricing data for nearly 100,000 individual products across 11 countries, the authors were able to model consumption levels across countries. There was little to no estimation lag (i.e., the delay between data collection and publication), no need for CPI extrapolation, and the results were close to official reports.

The benefits don’t end there, however. As the authors themselves note, the data is more granular and delivers additional insights. For example, relative price levels across countries can be derived from web-scraped data. That cannot be done with CPI, which measures price changes relative to a base period rather than price levels.
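
The idea behind cross-country price levels can be sketched in a few lines: for identical products sold in two countries, take the geometric mean of their local-currency price ratios (a simple purchasing power parity) and divide it by the market exchange rate. This is a simplified, unweighted version for illustration, not the method used in the paper; the products, prices, and exchange rate are made up.

```python
from statistics import geometric_mean


def relative_price_level(
    prices_a: dict[str, float],
    prices_b: dict[str, float],
    exchange_rate: float,
) -> float:
    """Price level of country B relative to country A (1.0 means equal).

    prices_a: product -> price in A's currency
    prices_b: product -> price in B's currency
    exchange_rate: units of B's currency per one unit of A's currency
    """
    matched = sorted(prices_a.keys() & prices_b.keys())
    if not matched:
        raise ValueError("no identical products found in both countries")
    # Unweighted geometric mean of price ratios: a basic bilateral PPP.
    ppp = geometric_mean([prices_b[p] / prices_a[p] for p in matched])
    return ppp / exchange_rate


# Hypothetical: the same two products, priced in USD (A) and EUR (B).
usa = {"tv-model-x": 500.0, "phone-model-y": 800.0}
eurozone = {"tv-model-x": 540.0, "phone-model-y": 864.0}
print(relative_price_level(usa, eurozone, exchange_rate=0.9))  # approximately 1.2
```

A result of about 1.2 would mean the matched products cost roughly 20% more in country B than in country A once converted at the market exchange rate.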

The Billion Prices Project created several offshoots, some of which are still alive and well. Some aim to solve specific problems in macroeconomic research. Others, such as Inflacion Verdadera Argentina, shed light on data that would otherwise be unavailable.

Due to some political complexities, Argentina’s government had started to publish inflation data that seemed suspect. Inflacion Verdadera Argentina was launched to uncover the truth, and Cavallo’s team discovered that the numbers were more than a little suspicious: the officially reported inflation rate was one third of the rate his team found.

The third BPP offshoot, PriceStats, could help solve the CPI delay problem. According to the US Bureau of Labor Statistics, the CPI’s expenditure weights are based on consumer spending data from a few years earlier. Web scraping can dramatically reduce this lag and provide more accurate and timely data.
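
A daily index built from scraped prices could, in its simplest form, chain together day-to-day changes: for each pair of consecutive days, take the geometric mean of price relatives for products present on both days, then multiply these links together. This is a hypothetical, unweighted sketch and not PriceStats’ methodology; a production index would also weight categories, filter outliers, and handle products entering and leaving more carefully.

```python
from statistics import geometric_mean


def chained_daily_index(snapshots: list[dict[str, float]]) -> list[float]:
    """Chained, unweighted daily price index starting at 100.

    snapshots: one dict per day (product_id -> price), in date order.
    Each daily link is the geometric mean of price relatives for products
    present on both that day and the previous day.
    """
    index = [100.0]
    for prev, curr in zip(snapshots, snapshots[1:]):
        matched = prev.keys() & curr.keys()
        if matched:
            link = geometric_mean([curr[p] / prev[p] for p in matched])
        else:
            link = 1.0  # no overlapping products: carry the index forward unchanged
        index.append(index[-1] * link)
    return index


# Hypothetical three days of scraped prices; product "c" appears only on day 3.
days = [
    {"a": 1.0, "b": 2.0},
    {"a": 1.1, "b": 2.0},
    {"a": 1.1, "b": 2.2, "c": 5.0},
]
print(chained_daily_index(days))  # ends at approximately 110
```

Chaining only matched products is what lets such an index cope with the constant churn of products in online stores.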

PriceStats’ data is unfortunately shared only on request. While I haven’t acquired such data, they publish a great example of aggregate inflation, with the project’s figures and official CPI data displayed side by side. Both the problem and the solution are clearly visible.

Finally, web scraping seems poised for a long career in macroeconomics. Cavallo recently published research showing that the sudden shift in shopping patterns caused by COVID-19 made the usual CPI measures inaccurate.
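
Why a shift in shopping patterns matters can be shown with a toy calculation: the same category-level price changes produce different aggregate inflation depending on the expenditure weights. All numbers below are invented for illustration and are not taken from Cavallo’s research.

```python
def aggregate_inflation(
    category_inflation: dict[str, float], weights: dict[str, float]
) -> float:
    """Expenditure-weighted average of category inflation rates (in percent)."""
    total_weight = sum(weights.values())
    return sum(category_inflation[c] * w for c, w in weights.items()) / total_weight


# Hypothetical category inflation rates, in percent.
inflation = {"food": 5.0, "transport": -10.0, "recreation": 0.0}
old_weights = {"food": 0.3, "transport": 0.4, "recreation": 0.3}  # pre-shift basket
new_weights = {"food": 0.5, "transport": 0.2, "recreation": 0.3}  # shifted basket

print(aggregate_inflation(inflation, old_weights))  # approximately -2.5
print(aggregate_inflation(inflation, new_weights))  # approximately 0.5
```

With the old weights, falling transport prices dominate; once households spend more on food and less on transport, the same price changes add up to positive inflation.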

A Window to the Micro in Macroeconomics

There’s more to be done. Metrics calculated from scraped data shouldn’t be seen merely as a way to “show the government.” Independent research can enhance existing methods and deepen our understanding of current macroeconomic phenomena.

Web scraping builds the data scaffolding we never had before. Monetary policy, a topic so complex and intricate that it alone is an argument for keeping central banks independent of governments, turns the economic dials of an entire country (or even a group of countries). Small changes in things such as money supply or interest rates can have an enormous impact on economic health.

Unfortunately, we often don’t get to see the direct impact of monetary policy. Economists usually describe several types of lags. Regardless of how many there truly are, the end result is the same: the full impact of monetary policy isn’t immediately visible.

Additionally, there’s a gap in the data. Models are used to predict outcomes, and while they are mostly accurate, web scraping can do better by bridging the gap between monetary policy and its effects.

Web scraping can be used to collect data about businesses, prices, and numerous other factors to track these slowly emerging changes. Such data would allow us to delve deeper into the minutiae of monetary policy and tease out the real-world effects of even the smallest changes.

In the end, two important and unique aspects of web scraping remarkably enhance the possibilities of economic research. First, the data is nearly instant. Economics has long relied on historical data that’s always a little late to the party, since it has to pass through many institutions before being made accessible.

Second, it’s more reliable. All data is collected “as is.” While data manipulation might be infrequent, there’s always the possibility of error, intentional or not. With web scraping, researchers can independently check and verify the information themselves.

Conclusion

Web scraping lies within the domain of data, and data can be useful for more than just profit-driven purposes. Proper use of these tools can aid in research and policy-making. Indeed, macroeconomics is just one discipline where automated data collection can be used for the greater good.
